Introduction

A rule engine is a software tool that enables developers to model the world in a declarative way. In that sense, it functions as a facilitator of abstraction, a process that distills complex realities into simpler, more recognisable patterns. A useful abstraction is one that removes things we don’t need to concern ourselves with in a given context. In software development, describing what should happen, rather than how to make it happen, is called declarative programming.

Taking this into account, a rule engine is useful to software developers only if it frees them from expressing the rule in code. The goal of a rule engine, therefore, is to raise this abstraction to a higher level. Any time a developer fails to express a particular rule (use case) with a rule engine, she will eventually be forced to “solve it in the code anyway”. She then has to manage two abstractions in parallel, one in the rule engine and one in the code, which is a nightmare.

To avoid this pitfall, the commonly accepted advice is to use rule engines only where appropriate, or not at all. Over the past decades, that has become a self-fulfilling prophecy. Driven by the idea that if something doesn’t work we’ll have to sort it out in the code anyway, we have limited what rule engines can do while, at the same time, narrowing the set of problems we feel can safely be addressed with them.

Much of the power of rules engines stems from the fact (or promise) that they allow us to make more accurate decisions in real time. An advanced rule engine can fulfil that role by ingesting real-time data, reasoning on that data and invoking automated actions based on the result of that reasoning.

In this post we will dive a bit deeper into the technology of the Waylay rule engine and explain why we believe that Waylay is the ultimate rule engine for IoT and for some other verticals, such as financial services, where modelling complex logic is a must.

Now, before we get more specific about Waylay’s technology, let’s have a look at the existing solutions.

Decision trees/tables

A popular way of capturing the complexity of conditional rules is to use decision trees or tables (they are not exactly the same thing, but for the purposes of this post we will treat them as one category). Decision trees are graphs that use a branching method to illustrate every possible outcome of a decision. Several products on the market offer rules engines based on decision trees/tables. Decision trees and tables are used across many domains: in finance, for credit scoring, risk assessment and investment portfolio optimisation; in healthcare, in medical diagnosis systems that help doctors identify diseases or conditions based on patient symptoms and medical history.

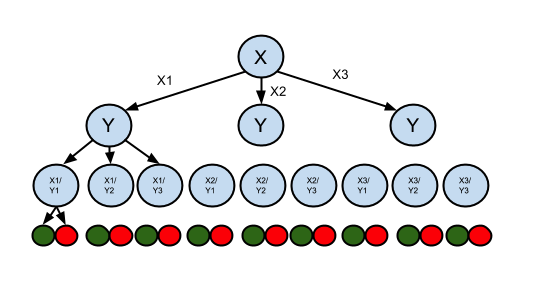

To illustrate, let’s model a problem with a decision tree, using the following example: assume you are asked to create a rule from two input data sources (e.g. temperature and humidity). Each measurement is classified into one of three states (e.g. low, medium and high). The final decision of the reasoning process is a TRUE/FALSE statement that depends on these two inputs.

As the figure above shows, this leads to 18 leaf nodes (red/green dots) and 31 nodes overall, for only two variables! The depth of the tree grows linearly with the number of variables, but the number of branches grows exponentially with it, with the number of states per variable as the base. Decision trees are useful when the number of states per variable is limited (e.g. binary YES/NO), but they quickly become overwhelming as the number of variables and states increases. Anyone who has tried this approach before knows you can end up with something of this sort:

Debugging becomes a real challenge, and updating the logic over time a daunting task. Things get even more complicated when the state of a variable depends on a threshold or on more complex computations. Communicating the rationale of the logic to others (whether colleagues in the department, project partners or customers) requires you to label every edge, expressing that specific “sub-rule” in the graph. Others then need to trace the tree top-down to figure out the logic expressed this way.
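The node counts quoted for the two-variable example follow from simple arithmetic. The sketch below assumes the tree has a root, one branching level per variable and a final level that splits every path into a TRUE and a FALSE leaf; that layout is our reading of the figure, chosen because it reproduces the 18 leaves and 31 nodes. With n variables of s states each there are s^n paths, so the leaf count grows exponentially with the number of variables.

```python
def tree_size(num_vars: int, num_states: int, num_outcomes: int = 2) -> tuple[int, int]:
    """Return (leaf_count, total_node_count) for a complete decision tree.

    Assumed layout: a root, one branching level per variable with
    `num_states` children per node, and a final level that splits every
    path into `num_outcomes` outcome leaves (TRUE/FALSE).
    """
    paths = num_states ** num_vars      # one path per combination of states
    leaves = paths * num_outcomes       # each path ends in TRUE/FALSE leaves
    # root + one level per variable: 1 + s + s^2 + ... + s^n nodes
    internal = sum(num_states ** k for k in range(num_vars + 1))
    return leaves, internal + leaves


for n in range(1, 6):
    leaves, total = tree_size(n, 3)
    print(f"{n} variables x 3 states: {leaves} leaves, {total} nodes")
# 2 variables x 3 states: 18 leaves, 31 nodes
# 5 variables x 3 states: 486 leaves, 850 nodes
```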

One thing that also often gets swept under the carpet is that decision trees struggle to express majority “voting”, where, for instance, 2 out of 4 conditions need to be TRUE in order to pass a particular criterion.
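To make the majority-voting point concrete, here is a minimal Python sketch (the function names are ours, purely illustrative). A 2-out-of-4 vote is a one-line aggregation, whereas an unpruned binary decision tree over four conditions has 16 leaves, 11 of which pass. Pruning can shrink such a tree, but the voting rule still ends up spread over many branches instead of being stated once.

```python
from itertools import product


def majority_vote(conditions: list[bool], required: int) -> bool:
    """Pass when at least `required` of the conditions are TRUE."""
    return sum(conditions) >= required


def passing_tree_paths(num_conditions: int, required: int) -> list[tuple[bool, ...]]:
    """Enumerate the root-to-leaf paths of an unpruned binary decision tree
    (every TRUE/FALSE combination) and keep only those that pass the vote."""
    return [
        combo
        for combo in product([True, False], repeat=num_conditions)
        if majority_vote(list(combo), required)
    ]


all_paths = 2 ** 4
passing = passing_tree_paths(4, 2)
print(f"{len(passing)} of {all_paths} tree paths pass")  # 11 of 16 tree paths pass
```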

You can find a more elaborate discussion of this problem, and of how Waylay can be used as a powerful alternative for use cases that are often modelled with decision trees, in the following post: The curse of dimensionality in decision trees – the branching problem

Flow engines

Flow engines are an alternative technology that has been used for designing, modelling and executing workflows or business processes. With flows you have two additional problems to deal with: parsing of the message payload becomes part of the “rule”, and, more importantly, flows constrain the logic designer to think linearly, from left to right, following the “message flow”. An interesting problem arises when two inputs arrive at different times. How long do you wait for the next one to arrive before moving on with the decision? How long is a data point/measurement valid?
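The timing questions above are easy to ask and awkward to answer inside a linear flow. One common way to make them explicit is to keep the latest value of each input together with its timestamp and treat it as usable only within a validity window. The sketch below is our own illustration; the class name, the 60-second validity and the thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    value: float
    timestamp: float  # seconds since some reference time


class LatestValues:
    """Keep the most recent observation per input and expire stale ones."""

    def __init__(self, validity_s: float) -> None:
        self.validity_s = validity_s
        self._latest: dict[str, Observation] = {}

    def update(self, name: str, value: float, timestamp: float) -> None:
        self._latest[name] = Observation(value, timestamp)

    def get(self, name: str, now: float) -> Optional[float]:
        """Return the value if it is still valid at `now`, else None."""
        obs = self._latest.get(name)
        if obs is None or now - obs.timestamp > self.validity_s:
            return None
        return obs.value


def too_hot_and_humid(store: LatestValues, now: float) -> Optional[bool]:
    """Hypothetical rule over two asynchronous inputs."""
    temperature = store.get("temperature", now)
    humidity = store.get("humidity", now)
    if temperature is None or humidity is None:
        return None  # undecided: at least one input is missing or stale
    return temperature > 30.0 and humidity > 70.0


store = LatestValues(validity_s=60.0)
store.update("temperature", 32.0, timestamp=0.0)
print(too_hot_and_humid(store, now=10.0))  # None: humidity not seen yet
store.update("humidity", 75.0, timestamp=20.0)
print(too_hot_and_humid(store, now=30.0))  # True
print(too_hot_and_humid(store, now=90.0))  # None: temperature older than 60 s
```

Returning `None` makes "not enough valid data" a distinct outcome instead of silently treating it as FALSE.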

Flow engines have no notion of states and state transitions. Combining multiple non-binary outcomes of functions (observations) in a rule is still possible, but must be coded in every function where it is applied. That also means you have to branch at every function where you need to model a multiple-choice outcome. This leads to extremely busy flow graphs that are hard to follow, especially since the logic is expressed both in the functions themselves and in their “connectors” (path executions). These connectors suggest not only the information flow but also the decisions being taken.

Therefore, flow engines are very useful tools for "pipeline problems", often found in ETL/DevOps tools, but have a very limited scope when it comes to being used as a generic rules engine.

CEP engines

Complex Event Processing (CEP) engines are also popular in the IoT world. CEP allows for easy matching of time-series data patterns coming from different sources. CEP suffers from the same modelling issues as trees and other pipeline processing engines. However, it frees developers from dealing with context locking, a common issue in use cases where logic combines inputs from different sources.
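The kind of pattern a CEP engine matches can be sketched in plain Python. The example below is our own illustration, not the API of any CEP product: it looks for a "door opened" event from one source followed by a "motion" event from another source within a time window, over a merged, time-ordered event stream.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    source: str
    kind: str
    timestamp: float  # seconds


def followed_by(events: list[Event], first: str, second: str,
                within_s: float) -> list[tuple[Event, Event]]:
    """Return (first, second) pairs where an event of kind `second` occurs
    no more than `within_s` seconds after an event of kind `first`.

    Assumes `events` is sorted by timestamp, as it would be after merging
    time-ordered streams from different sources.
    """
    matches: list[tuple[Event, Event]] = []
    pending: list[Event] = []  # `first` events still waiting for a partner
    for event in events:
        # Drop `first` events whose window has expired.
        pending = [p for p in pending if event.timestamp - p.timestamp <= within_s]
        if event.kind == second:
            matches.extend((p, event) for p in pending)
        if event.kind == first:
            pending.append(event)
    return matches


stream = [
    Event("door-sensor", "door_opened", 0.0),
    Event("camera", "motion", 5.0),
    Event("door-sensor", "door_opened", 100.0),
    Event("camera", "motion", 200.0),
]
for a, b in followed_by(stream, "door_opened", "motion", within_s=30.0):
    print(a.timestamp, "->", b.timestamp)  # 0.0 -> 5.0
```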

Stream Processing engines

Stream processing engines, such as Flink or Spark, are another alternative. They not only process streams of data at scale, but also allow “querying” that real-time data using SQL-like syntax. This ability makes stream processing engines a viable alternative to CEP platforms, since they let you create simple rules that run within stream “windows” of time and make decisions with the ease of SQL queries. In our view, these are good alternatives when it comes to data aggregation/event processing and data analytics (batch and stream) platforms, but they are not rules engines per se.
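A "simple rule within a stream window" boils down to grouping events into time windows and testing an aggregate. The sketch below imitates a tumbling-window query (average per non-overlapping window, compared against a threshold) in plain Python; it is our own illustration, not Flink or Spark code, and the window size and threshold are arbitrary.

```python
from collections import defaultdict


def tumbling_window_alerts(readings: list[tuple[float, float]], window_s: float,
                           threshold: float) -> list[tuple[float, float]]:
    """Group (timestamp, value) readings into non-overlapping windows of
    `window_s` seconds and return (window_start, average) for every window
    whose average exceeds `threshold`."""
    windows: dict[int, list[float]] = defaultdict(list)
    for timestamp, value in readings:
        windows[int(timestamp // window_s)].append(value)
    alerts = []
    for index in sorted(windows):
        values = windows[index]
        average = sum(values) / len(values)
        if average > threshold:
            alerts.append((index * window_s, average))
    return alerts


readings = [(0, 20.0), (30, 22.0), (65, 31.0), (90, 33.0), (130, 25.0)]
print(tumbling_window_alerts(readings, window_s=60, threshold=30.0))  # [(60, 32.0)]
```

Note that the rule can only reason over values present in `readings`; anything else, such as a customer's contract level, would have to be joined into the stream beforehand.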

One issue that is often forgotten is that stream processing engines are designed to make "decisions" based on data that is in the data stream. At first, that sounds like an obvious and non-limiting factor, but if the decision the rule has to make depends on other parameters or values that come from databases or APIs (customer, business or asset info), you simply can't bring them into the rule definition. A stream processing engine can't just block execution for a while and "ask" for more inputs on which the rule needs to be evaluated.

Stream processing rules engines are typically used for applications such as algorithmic trading, market data analytics, network monitoring, surveillance, e-fraud detection and prevention and real-time compliance (anti-money laundering). However, as the demand for rules engines that integrate seamlessly with machine learning (ML) models and external APIs continues to grow, the scope and utility of stream processing engines are increasingly constrained.

BPM or FSM (Finite State Machine) engines

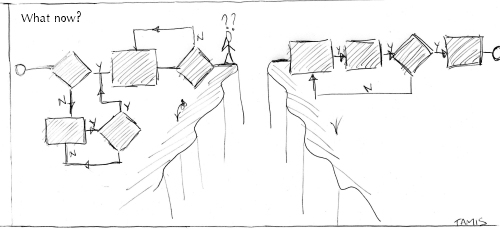

A state machine describes a system in terms of the set of states it goes through. A state is a description of the status of a system that is waiting to execute a transition. A transition is a set of actions to be executed when a condition is fulfilled or when an event is received. AWS Step Functions, for instance, is an "FSM-type" rules engine. Camunda is an example of a BPM-based engine; even though BPM engines are not, strictly speaking, positioned as rules engines, we include them here for completeness as a possible way to implement a rule. One benefit of FSMs is that the concepts are easy to grasp for different types of users. Having said that, one of the issues with BPM engines is that your logic often gets broken somewhere in the middle, as illustrated in this cartoon by @MarkTamis:

BPM was invented when business processes were rigid and did not change for years; it was not meant to be used in dynamic environments. Another often forgotten limitation of BPM engines is that they are designed to process one event at a time. That makes them suitable where we want to respond to a given event (such as an alarm or a document that needs post-processing), but by no means suitable as generic rules engines. Therefore, FSM-based rules are often found in field force or customer care applications, or wherever tools need to respond to an alert (security, etc.). Similarly, BPM engines are often found as a primary tool in RPA solutions, for document processing or marketing automation.

Combining multiple non-binary outcomes and majority voting is not possible with FSM engines. One thing often overlooked with FSM is that states imply transitions, that is to say, the only purpose of having something modelled as a state is to navigate a particular decision flow. A direct result of that is that FSM lacks readability as rules become more complex, or when a particular corner case needs to be modelled as a state. Since FSM is capable of executing only one transition at a time, when a user tries to introduce events that might happen under certain conditions, she needs to add a new state. When the number of states becomes too large, the readability of the state machine drops significantly.
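The state and transition vocabulary used in this section maps onto a plain transition table. The sketch below is a generic finite state machine with hypothetical alarm-handling states; it is not how AWS Step Functions or Camunda define workflows (each has its own definition language), only an illustration of the concept.

```python
# Hypothetical transition table: (current state, event) -> next state
TRANSITIONS: dict[tuple[str, str], str] = {
    ("idle", "alarm_raised"): "investigating",
    ("investigating", "false_alarm"): "idle",
    ("investigating", "confirmed"): "dispatching_technician",
    ("dispatching_technician", "resolved"): "idle",
}


class StateMachine:
    def __init__(self, initial: str) -> None:
        self.state = initial

    def handle(self, event: str) -> str:
        """Apply one transition; events with no transition from the
        current state are ignored and the state stays unchanged."""
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state


machine = StateMachine("idle")
for event in ["alarm_raised", "confirmed", "resolved"]:
    print(event, "->", machine.handle(event))
```

Each new corner case, such as an alarm raised while a technician is already on site, needs new states and table entries of its own, which is how the readability problem described above creeps in.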

Conclusions on the current state of the art

Therefore, in our opinion, current rules engines have some serious limitations, as listed below:

- The logic created with these rule engines is hard to simulate and debug. That is more of a symptom; the underlying cause is closely related to their limited explainability when modelling complex use cases.

- Current rule engines don’t cope well with dynamic changes of the environment.

- All of them have difficulties combining data from physical devices/sensors (mostly PUSH mode) with data from the API world (mostly PULL mode). As described earlier, reacting to real-time data while also being able to fetch additional information for rule evaluation at any given time is an extremely hard problem to solve (one may argue, even without a rules engine).

- The logic representation is not compact, making debugging and maintenance more complex.

- These rule engines don’t provide easy ways to gain additional insights: why has a rule been fired, and under which conditions?

- They can’t model uncertainties, e.g. what to do when sensor data is noisy or is missing due to a battery or network outage.

- They struggle to express time constraints or window conditions.

- They make it hard to combine rules with ML models, in use cases such as anomaly detection, predictions or optimisation problems.

The best rules engine

Or so we think. 🙂 The Waylay rules engine belongs to the group of inference engines, and it is based on Bayesian Networks. What does this mean? Without going too much into the maths, let’s just say that every node in the graph propagates its state to all other nodes, so that each node “feels” what the other nodes are experiencing at any moment in time. If you wish to know more about our vision of using Bayesian Networks in the IoT context, please have a look here: A Cloud-Based Bayesian Smart Agent Architecture for Internet-of-Things Applications

- Unlike flow rule engines, there is no left-to-right input/output logic. Information flows in all directions, all the time.

- Unlike decision trees, the Waylay engine does not model logic by branching all possible outcomes.

- Unlike flows or pipes, the Waylay engine does not use “injector nodes” or “split” input/output nodes.

- Unlike BPMs, the Waylay engine does not directly wire the control flow.
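To give a feel for what it means for nodes to propagate their states to each other, here is a textbook two-node Bayesian network evaluated by exact enumeration. It is a generic illustration with made-up probabilities, not Waylay's implementation: a hidden cause (an HVAC fault) influences an observed sensor (high temperature). Observing the sensor updates the belief about the fault, so information flows against the direction of the causal arrow as well as along it.

```python
# Prior belief that the HVAC unit is faulty (illustrative numbers).
P_FAULT = {True: 0.1, False: 0.9}

# Conditional probability table: P(temperature_high = True | fault).
P_HIGH_GIVEN_FAULT = {True: 0.8, False: 0.2}


def posterior_fault(temperature_high: bool) -> float:
    """P(fault = True | observed temperature state), via Bayes' rule."""
    def likelihood(fault: bool) -> float:
        p_high = P_HIGH_GIVEN_FAULT[fault]
        return p_high if temperature_high else 1.0 - p_high

    joint = {fault: P_FAULT[fault] * likelihood(fault) for fault in (True, False)}
    return joint[True] / (joint[True] + joint[False])


def predicted_high_temperature() -> float:
    """P(temperature_high) with no evidence, by summing over the fault state."""
    return sum(P_FAULT[f] * P_HIGH_GIVEN_FAULT[f] for f in (True, False))


print(f"P(high temp), no evidence: {predicted_high_temperature():.2f}")  # 0.26
print(f"P(fault | high temp):      {posterior_fault(True):.3f}")         # 0.308
print(f"P(fault | normal temp):    {posterior_fault(False):.3f}")        # 0.027
```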

So how exactly does the Waylay rules engine work?

You may wonder, then, how you work with a rule engine like that.

At Waylay, we've introduced a rules engine concept embodied as a smart agent. This agent works alongside a set of sensors and actuators, represented as stateless functions. Sensors can be thought of as objects with specific states, such as doors (open or closed), HVAC units with their data and conditions, or customer records; actuators are actions such as alerts, emails or new database records. This abstraction frees you from immediate logic concerns while developing a new library of functions.

Moreover, this approach facilitates role separation between those responsible for writing these small code snippets and those in charge of knowledge modelling for rule composition. Alternatively, you can view this from a low-code perspective, where sensor code snippets become low-code functions. In this view, Waylay's rules engine transforms into a serverless orchestration engine, as described in this post.
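The sensor/actuator split can be pictured as two kinds of plain functions. The sketch below is hypothetical: the names, signatures and the `door_sensor`/`send_alert` examples are ours, not Waylay's SDK. A sensor is a stateless function that maps its inputs to one of a fixed set of states, and an actuator performs an action. Neither contains the rule logic; that lives in whatever connects them.

```python
from typing import Callable

Sensor = Callable[[dict], str]     # inputs -> state name
Actuator = Callable[[dict], None]  # context -> side effect


def door_sensor(inputs: dict) -> str:
    """Hypothetical sensor: assumes contact == 0 means the circuit is broken,
    i.e. the door is open."""
    return "open" if inputs["contact"] == 0 else "closed"


def send_alert(context: dict) -> None:
    """Hypothetical actuator: prints instead of sending an email."""
    print(f"ALERT: {context['message']}")


def run(sensor: Sensor, inputs: dict, fire_on: str,
        actuator: Actuator, context: dict) -> str:
    """Evaluate one sensor and fire the actuator when it reaches `fire_on`.
    The rule (which state triggers which actuator) sits outside both functions."""
    state = sensor(inputs)
    if state == fire_on:
        actuator(context)
    return state


run(door_sensor, {"contact": 0}, "open", send_alert, {"message": "back door is open"})
```

Because both functions are stateless and rule-agnostic, the person writing `door_sensor` never needs to know which rules will use it.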

This way of inference modelling also allows both push mode (over REST/MQTT/websockets) and pull mode (API, database, etc.) to be treated as first-class citizens. For the engine, it makes no difference: as soon as a new insight is provided, it is propagated to all other nodes in the network. The best way to picture this is to imagine raindrops falling on a water surface. You will notice that the “communication” between the drops resembles a wave, that the size of each circle is proportional to the time since the drop fell (the bigger the circle, the earlier the drop fell), and that the wave amplitude weakens over time.
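A minimal sketch of the idea that push and pull are both first-class, under our own assumptions: whether a value arrives by push (here, a stand-in for an MQTT message handler) or is fetched by pull (a stand-in for an API or database lookup), it goes through the same `observe` call and the rule is re-evaluated the same way. The class, node names and `fetch_customer_tier` are hypothetical, real transports are replaced by plain function calls, and a real engine would propagate beliefs through the whole network rather than re-run one rule.

```python
from typing import Callable


class Agent:
    """Toy agent: stores node states and re-evaluates a rule on every new observation."""

    def __init__(self, rule: Callable[[dict], bool]) -> None:
        self.rule = rule
        self.states: dict[str, str] = {}
        self.fired = 0

    def observe(self, node: str, state: str) -> None:
        # Same entry point for pushed and pulled data.
        self.states[node] = state
        if self.rule(self.states):
            self.fired += 1


def on_mqtt_message(agent: Agent, payload: dict) -> None:
    """Push path stand-in: called when a device publishes a reading."""
    agent.observe("temperature", "high" if payload["celsius"] > 30 else "normal")


def fetch_customer_tier(customer_id: str) -> str:
    """Pull path stand-in: a real agent would query an API or database here."""
    return {"c-42": "premium"}.get(customer_id, "standard")


def rule(states: dict) -> bool:
    return states.get("temperature") == "high" and states.get("tier") == "premium"


agent = Agent(rule)
on_mqtt_message(agent, {"celsius": 34})             # pushed data
agent.observe("tier", fetch_customer_tier("c-42"))  # pulled data
print(agent.fired)  # 1
```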

Another important aspect of the Waylay rule engine is that it allows a compact representation of logic. Combining two objects/variables, as in the decision tree example above, is simplified by adding one aggregation node. The relation between the variables and their states can be expressed as a boolean expression. That results in a graph with only 3 nodes (compared to 31 nodes for the decision tree described above). More about this in future blog posts.
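Here is how the two-variable example might look as three nodes: two sensors, each reporting one of three states, and one aggregation node holding a boolean expression over those states. The specific expression is a hypothetical example of ours, since the post does not state which combinations the tree maps to TRUE. The point is that the rule is written once instead of being spread over 18 leaves.

```python
TEMPERATURE_STATES = ("low", "medium", "high")
HUMIDITY_STATES = ("low", "medium", "high")


def aggregation_node(temperature: str, humidity: str) -> bool:
    """One node replaces the whole tree: the rule is a single boolean expression.
    Hypothetical rule: uncomfortable when it is hot and not dry,
    or when humidity is high regardless of temperature."""
    return (temperature == "high" and humidity != "low") or humidity == "high"


# The same rule expanded into the 9 state combinations a decision tree must enumerate.
for t in TEMPERATURE_STATES:
    for h in HUMIDITY_STATES:
        print(f"temperature={t:<6} humidity={h:<6} -> {aggregation_node(t, h)}")
```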

In more advanced use cases, Waylay also enables probabilistic reasoning. In that case, you configure actuators to fire when a node is in a given state with a given probability. Moreover, we can associate different actuators with different likelihood outcomes for any node in the graph. This feature enables some interesting use cases that deserve separate blog posts, so more about that later.
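Attaching actuators to likelihoods can be sketched as a list of (node, state, probability threshold, action) bindings checked against each node's current posterior distribution. The data structure and thresholds below are our own illustration of the idea, not Waylay's configuration format.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Binding:
    node: str
    state: str
    min_probability: float
    action: Callable[[], str]


def triggered(posteriors: dict[str, dict[str, float]],
              bindings: list[Binding]) -> list[str]:
    """Run every action whose node/state currently meets its probability threshold."""
    results = []
    for b in bindings:
        if posteriors.get(b.node, {}).get(b.state, 0.0) >= b.min_probability:
            results.append(b.action())
    return results


bindings = [
    # Different actions for different likelihoods of the same node state.
    Binding("fault", "yes", 0.3, lambda: "schedule inspection"),
    Binding("fault", "yes", 0.8, lambda: "shut down unit"),
]
posteriors = {"fault": {"yes": 0.45, "no": 0.55}}
print(triggered(posteriors, bindings))  # ['schedule inspection']
```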

All sensors, actuators and logic are stored as templates. That is also the place where the reasoning engine resides. Putting all of this together, what we get is a smart reasoning platform with compact logic for easy maintenance, running dynamic workflows in fully asynchronous mode, supporting both push and pull modes of operation, extensible through integrations and, not to forget, built in the cloud, for the cloud.

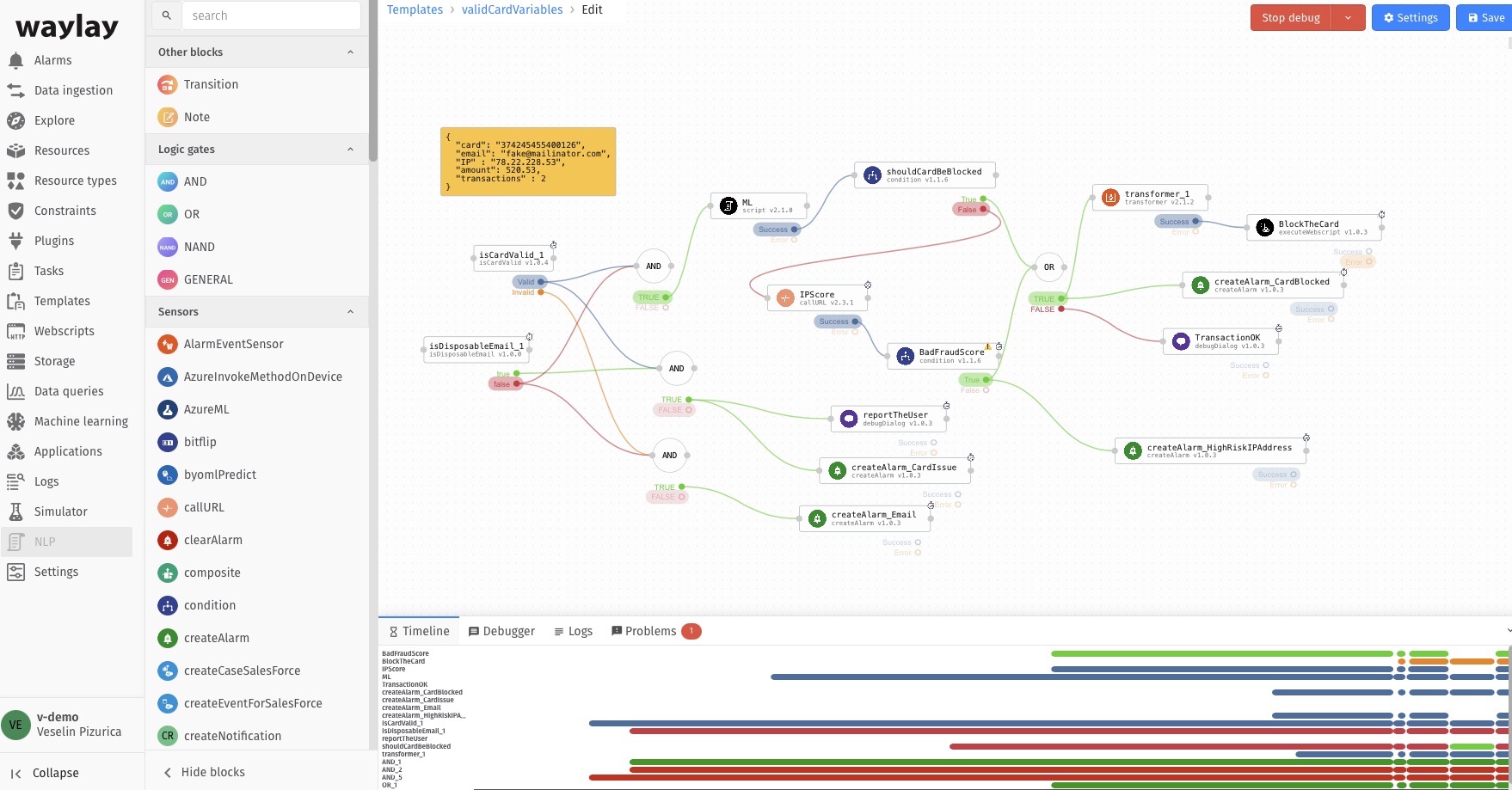

Have a look at the design editor (picture below), and try to read with us what you see on the screen:

“If the payment card is not valid, or if the purchase originates from the temporary payment, stop the payment. Otherwise, run the payment through the ML model, and if the payment should proceed, check once more for the IP address, to make sure that the location from which the payment originated is not compromised.” This is one of many stories you can build and tell just by looking at our designer.
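For readers who think in code, the story above can be written down as a plain function. Every input here is hypothetical: the boolean flags stand in for sensors in the designer, `ml_score` for the ML model's output with an arbitrary 0.5 cut-off, and `ip_compromised` for the IP-address check. In the designer these are separate nodes; the function only shows the decision order the story describes.

```python
from dataclasses import dataclass


@dataclass
class Payment:
    card_valid: bool
    from_temporary_payment: bool  # mirrors the story's wording; exact meaning assumed
    ml_score: float               # hypothetical: model's probability that the payment is legitimate
    ip_compromised: bool


def decide(payment: Payment, ml_threshold: float = 0.5) -> str:
    # Stop immediately on an invalid card or a temporary-payment origin.
    if not payment.card_valid or payment.from_temporary_payment:
        return "stop"
    # Otherwise ask the ML model; assumed: stop when it says the payment should not proceed.
    if payment.ml_score < ml_threshold:
        return "stop"
    # Final check: the originating IP address must not be compromised.
    if payment.ip_compromised:
        return "stop"
    return "proceed"


print(decide(Payment(True, False, 0.92, False)))  # proceed
print(decide(Payment(True, False, 0.92, True)))   # stop
```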

A great thing about Waylay’s platform is that the web designer allows adding new actuators and sensors at runtime. You can even create and simulate new rules before the sensors and actuators are implemented. Also, every sensor/actuator and design logic has template support, something you can share with other team members, domain experts and customers. The Waylay rule engine, with all its functionality, is accessible via a REST, MQTT or websocket interface, and our web designer is nothing more than a web UI developed on top of the REST interface. Our engine also includes a small CEP processing unit, allowing formula computation and pattern matching on the level of the raw data or observed node states.

For a comparative guide to rules engines for IoT, have a look at our extensive benchmark evaluation over here.

To learn more about the rule engine benchmark and see how you can evaluate any rule-based technology yourself, have a look at our white paper How to Choose a Rules Engine. It defines three technology criteria and four implementation criteria that are important to the IoT domain.

To find out more about the Waylay engine and its internals, go to our documentation page or read the following blogs on the same subject:

- The Waylay engine: Bayesian inference-based programming using smart agents

- The curse of dimensionality in decision trees – the branching problem

- Our documentation: Rule patterns

- Creating applications with cloud functions – how to manage rules and orchestration in serverless architectures

- AI and IoT, Part 1: Challenges of applying Artificial Intelligence in IoT using Deep Learning

- AI and IoT, Part 2: Deep Learning and Bayesian Modelling, building the automation of the future

- AI and IoT, Part 3: How to apply AI techniques to IoT solutions – a smart care example